I am trying to write an expression which will mimic the mv2DToxik motion vectors, but for hardware particles. That would remove the need to instance some geo onto the particles just to get the motion vectors to render.

I had not got very far before I ran into some vector maths...

Here is the setup which works for a camera pointing exactly down the z-axis.

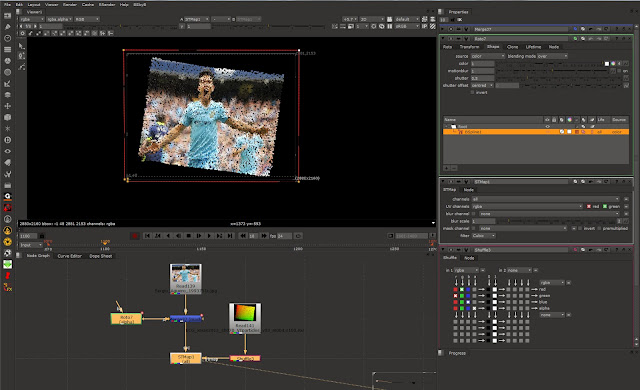

Here is the render loaded into Nuke. Notice the RGB values.

I now need to find a way to convert World Velocity to Screen Space Velocity.

In a MEL expression. Hmmm, time to ask the forum.

After some great advice from Zoharl, I grabbed some code which uses the camera's worldInverseMatrix to transform the velocity vector.
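For what it is worth, here is how I read that multiplication, as a sketch rather than anything official. I am assuming Maya's row-vector convention, where the translation sits in the bottom row of a 4x4 matrix. The symbols $R$, $\mathbf{t}$ and $w$ are my own labels, not Maya attribute names:

$$
\begin{bmatrix} v_x & v_y & v_z & w \end{bmatrix}
\begin{bmatrix} R & \mathbf{0} \\ \mathbf{t} & 1 \end{bmatrix}
=
\begin{bmatrix} \mathbf{v}R + w\,\mathbf{t} & w \end{bmatrix}
$$

Here $R$ is the 3x3 rotation (and scale) part of the camera's worldInverseMatrix and $\mathbf{t}$ is its translation row. With $w = 0$ only the rotated velocity $\mathbf{v}R$ survives. With $w = 1$ the translation $\mathbf{t}$ gets added as well, which is right for a position but not for a velocity unless the camera sits at the origin.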

Here is the expression:

//multiplier

float $mult=0.5;

//get the particle's World Space velocity

vector $vel=particleShape1.worldVelocity;

float $xVel=$vel.x;

float $yVel=$vel.y;

float $zVel=$vel.z;

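// create the particle's velocity matrix, which is in World Space

// w is 0 because a velocity is a direction: with w = 1 the camera's translation would be added to the result

matrix $WSvel[1][4]=<<$xVel,$yVel,$zVel,0>>;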

// get the camera's World Inverse Matrix

float $v[]=`getAttr camera1.worldInverseMatrix`;

matrix $camWIM[4][4]=<< $v[ 0], $v[ 1], $v[ 2], $v[ 3]; $v[ 4], $v[ 5], $v[ 6], $v[ 7]; $v[ 8], $v[ 9], $v[10], $v[11]; $v[12], $v[13], $v[14], $v[15] >>;

//multiply the particle's velocity matrix by the camera's World Inverse Matrix to get the velocity relative to the camera (used here as the Screen Space velocity)

matrix $SSvel[1][4]=$WSvel * $camWIM;

vector $result = <<$SSvel[0][0],$SSvel[0][1],$SSvel[0][2]>>;

float $xResult = $mult * $result.x;

float $yResult = $mult * $result.y;

float $zResult = $mult * $result.z;

//rgbPP

particleShape1.rgbPP=<<$xResult,$yResult,0>>;

So far it seems to be working, but I will try to test it and see if it breaks down.

Thanks to Zoharl on the CGTalk forum, and to xyz2.net and 185vfx, who came up with the original matrix manipulation code.